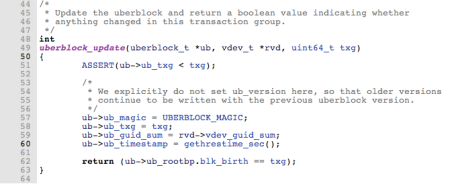

In my last blog entry, I described how to use DTrace to learn more about how and where the ZFS uberblock is written. I showed that an uberblock update causes 4 writes to 4 different areas, and only after 128 write cycles will an old uberblock be overwritten by a new one. Now, using zdb, I will show how often various zpools update their uberblocks so we can examine after which time a USB flash without wear leveling would probably be worn out.

Using zdb -uuuv, as in the following script:

#!/bin/ksh

TMPDIR=/var/tmp

LOGFILE=${TMPDIR}/ub-stats.out

echo "-----" >> ${LOGFILE}

date +"date+time: %Y-%m-%d %H:%M:%S" >> ${LOGFILE}

for ZPOOL in $(zpool list -o name -H); do

echo ${ZPOOL} >> ${LOGFILE}

zdb -uuuv ${ZPOOL} >> ${LOGFILE}

done

, we can show important information about the uberblocks of each zpool and write it to a file (for example once per minute, per crontab). A timestamp is useful for further analysis.

After some some hours or days, we can extract the data of certain zpools, import it into a spreadsheet and create graphs to show how often new uberblocks are written to disk. Here’s how we can print the data for rpool:

nawk '/date/{date=$2; time=$3}

/pool/{pool=$NF}

/txg/{printf ("%s %s\t%s\t%s\n", date, time, pool, $NF)}' ub-stats.out

A sample output might look like:

2009-01-06 22:14:00 dpool 66273 2009-01-06 22:14:00 dpool-2 58707 2009-01-06 22:14:00 rpool 33165 2009-01-06 22:15:00 dpool 66273 2009-01-06 22:15:00 dpool-2 58707 2009-01-06 22:15:00 rpool 33167 2009-01-06 22:16:00 dpool 66273 2009-01-06 22:16:00 dpool-2 58707 2009-01-06 22:16:00 rpool 33169 2009-01-06 22:17:00 dpool 66273 2009-01-06 22:17:00 dpool-2 58707 2009-01-06 22:17:00 rpool 33171

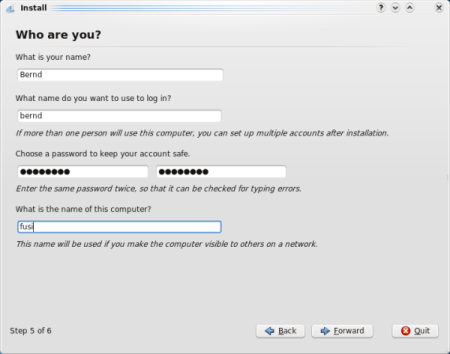

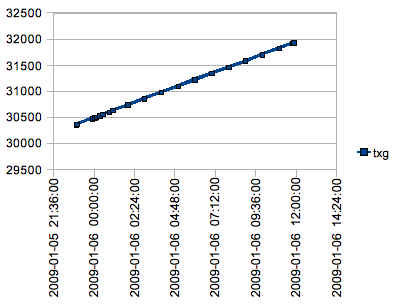

We can easily extract just one pool, using nawk ‘$3=="dpool"{print}’, for example, read the resulting file into OpenOffice, and create a graph of type x/y to show the txg numbers over time. For rpool, I got the following picture:

The data (you can even see it in the above table) shows that for rpool, txg is incremented at about two times per minute. Each additional manual "sync" command increments txg by one and also writes the uberblock to disk. Assuming that a USB flash drive without wear leveling wears out after data is written to the same location 10.000 times (which is the minimum guaranteed number of cycles for a standard USB flash drive mentioned in this document), knowing that the same block is overwritten 2*60*24/128 = less than 23 times after one day, we can calculate 10.000/22.5 to get the number of days until such a USB stick would be worn out. The result is about 444 days (=1 year 79 days). Assuming the USB stick is worn out only after 100.000 writes to the same location, the result would be 4444 days which is more than 12 years! At the end of this blog entry, I will explain why the USB stick might even live much longer than those 444 or 4444 days.

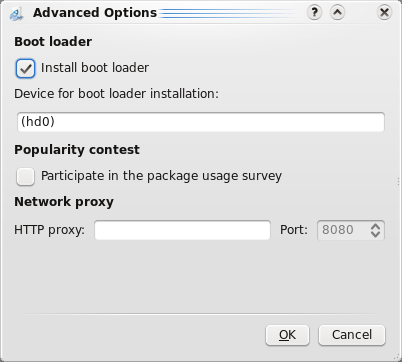

Interestingly, for another pool, name dpool, I got a completely different graph:

What I know is that on this pool, at about 06:00 am, a small number of files (about 10 to 20) is been created, again at 07:00 am, and during other times, there is no or not much write activity. Using another dtrace script, we can watch in real time and see that the uberblock’s txg number is indeed increased every 30 seconds but only written to disk when there is a change to the pool to which it belongs or, for example, when a sync command is issued on the command line. Here’s the script I used (a colleague recommended to also include spa_sync as this is the call for synching a transaction group, so I added two more probes for watching these events):

io:genunix:bdev_strategy:start

{

printf ("%Y %s %d %d", walltimestamp, execname, args[0]->b_blkno, args[0]->b_bcount);

}

fbt:zfs:spa_sync:entry

{

printf ("%Y %s %d", walltimestamp, execname, args[1]);

}

fbt:zfs:spa_sync:return,

fbt:zfs:vdev_uberblock_sync:entry

{

printf ("%Y %s", walltimestamp, execname);

}

fbt:zfs:uberblock_update:entry

{

printf ("%Y %d %d %d", walltimestamp,

args[0]->ub_rootbp.blk_birth, args[0]->ub_rootbp.blk_fill,

args[2]);

}

fbt:zfs:uberblock_update:return

{

printf ("%Y %d %d", walltimestamp, args[0], args[1]);

}

And here’s a sample output. I removed certain columns, including the hour (19 p.m.) from the original output. The times are minutes:seconds. For better readability, I have marked all entries and comments regarding writing the uberblock update for rpool to disk in green color, and all actions I performed in another terminal window while the dtrace script was running in red color.

spa_sync:entry 11:09 sched 41533 <- spa_sync() rpool bdev_strategy:start 11:09 sched 25671451 512 bdev_strategy:start 11:09 sched 4310260 512 bdev_strategy:start 11:09 sched 25571476 3072 bdev_strategy:start 11:09 sched 25671452 14848 spa_sync:entry 11:09 sched 69698 <- spa_sync() dpool-2 uberblock_update:entry 11:09 67082 178 69698 <- updating dpool-2. txg != blk_birth! uberblock_update:return 11:09 85 0 <- args[1]=0: no sync! spa_sync:return 11:09 sched spa_sync:entry 11:09 sched 76452 <- spa_sync() dpool uberblock_update:entry 11:09 75007 122 76452 <- updating dpool. Note that txg is 76452 whereas blk_birth is 75007: means that this file system has not been changed for (76452-75007)/2+1 = 723m 30s = 12h 3m 30s So the last change to dpool was at about 7:08 am. This is exactly the time stamp as shown in zdb -uuuv dpool. And the txg number shown in that output is the one that is read from disk, so it is 75007: (zdb output follows; dtrace output will be continued below) --- $ zdb -uuuv dpool Uberblock magic = 0000000000bab10c version = 13 txg = 75007 guid_sum = 10502025224250020770 timestamp = 1231481321 UTC = Fri Jan 9 07:08:41 2009 rootbp = [L0 DMU objset] 400L/200P DVA[0]=<0:fd8c54a00:200> DVA[1]=<0:1f8b7f8800:200> DVA[2]=<0:c02e0600:200> fletcher4 lzjb LE contiguous birth=75007 fill=122 cksum=a0e45aac0:42a5af596ce:e0b8e86ece60:201ef17f0b98da --- (dtrace output continued) uberblock_update:return 11:09 85 0 <- args[1]=0: no sync! spa_sync:return 11:09 sched bdev_strategy:start 11:09 sched 4310261 27136 bdev_strategy:start 11:09 sched 25571482 15360 . ... some more IO of various blocks and sizes . bdev_strategy:start 11:09 sched 25671511 512 bdev_strategy:start 11:09 sched 25671517 5632 uberblock_update:entry 11:09 41533 159 41533 <- updating rpool. txg == blk_birth! uberblock_update:return 11:09 85 1 <- args[1]=1: sync! vdev_uberblock_sync:entry 11:09 sched bdev_strategy:start 11:09 sched 378 1024 <- write to block #378!

bdev_strategy:start 11:09 sched 890 1024 bdev_strategy:start 11:09 sched 39005050 1024 bdev_strategy:start 11:09 sched 39005562 1024 spa_sync:return 11:09 sched spa_sync:entry 11:39 sched 41534 <- spa_sync() rpool uberblock_update:entry 11:39 41533 159 41534 <- updating rpool. txg != blk_birth! uberblock_update:return 11:39 85 0 <- args[1]=0: no sync! skip writing to block #380!

spa_sync:return 11:39 sched spa_sync:entry 11:39 sched 76453 <- spa_sync() dpool uberblock_update:entry 11:39 75007 122 76453 uberblock_update:return 11:39 85 0 spa_sync:return 11:39 sched spa_sync:entry 11:39 sched 69699 <- spa_sync() dpool-2 uberblock_update:entry 11:39 67082 178 69699 uberblock_update:return 11:39 85 0 spa_sync:return 11:39 sched spa_sync:entry 12:09 sched 69700 <- spa_sync() dpool-2 uberblock_update:entry 12:09 67082 178 69700 uberblock_update:return 12:09 85 0 spa_sync:return 12:09 sched spa_sync:entry 12:09 sched 76454 <- spa_sync() dpool uberblock_update:entry 12:09 75007 122 76454 uberblock_update:return 12:09 85 0 spa_sync:return 12:09 sched spa_sync:entry 12:09 sched 41535 <- spa_sync() rpool bdev_strategy:start 12:09 sched 25571530 1024 bdev_strategy:start 12:09 sched 4310376 1024 . ... some more IO of various blocks and sizes . bdev_strategy:start 12:09 sched 25671620 512 bdev_strategy:start 12:09 sched 25671626 5120 uberblock_update:entry 12:09 41535 159 41535 <- updating rpool. txg == blk_birth! uberblock_update:return 12:09 85 1 <- args[1]=1: sync! vdev_uberblock_sync:entry 12:09 sched bdev_strategy:start 12:09 sched 382 1024 <- write to block #382!

bdev_strategy:start 12:09 sched 894 1024 bdev_strategy:start 12:09 sched 39005054 1024 bdev_strategy:start 12:09 sched 39005566 1024 spa_sync:return 12:09 sched

(sync command issued in another terminal window)

(end sync command)

spa_sync:entry 12:23 sched 76455 <- spa_sync() dpool uberblock_update:entry 12:23 75007 122 76455 uberblock_update:return 12:23 85 0 spa_sync:return 12:23 sched spa_sync:entry 12:23 sched 69701 <- spa_sync() dpool-2 uberblock_update:entry 12:23 67082 178 69701 uberblock_update:return 12:23 85 0 spa_sync:return 12:23 sched spa_sync:entry 12:23 sched 41536 <- spa_sync() rpool bdev_strategy:start 12:23 sched 25671636 1536 bdev_strategy:start 12:23 sched 25671639 2560 . ... some more IO of various blocks and sizes . bdev_strategy:start 12:23 sched 25671718 3584 bdev_strategy:start 12:23 sched 25682884 2048 uberblock_update:entry 12:23 41536 159 41536 <- updating rpool. txg == blk_birth! uberblock_update:return 12:23 85 1 <- args[1]=1: sync! vdev_uberblock_sync:entry 12:23 sched bdev_strategy:start 12:23 sched 384 1024 <- write to next block, #384!

bdev_strategy:start 12:23 sched 896 1024 bdev_strategy:start 12:23 sched 39005056 1024 bdev_strategy:start 12:23 sched 39005568 1024 spa_sync:return 12:23 sched bdev_strategy:start 12:53 sched 234913576 12288 bdev_strategy:start 12:53 sched 234910656 16384 spa_sync:entry 12:53 sched 41537 <- spa_sync() rpool bdev_strategy:start 12:53 sched 25671725 14848 bdev_strategy:start 12:53 sched 25571590 1024 bdev_strategy:start 12:53 sched 4310548 1024 bdev_strategy:start 12:53 sched 25571588 1024 spa_sync:entry 12:53 sched 69702 <- spa_sync() dpool-2 bdev_strategy:start 12:53 sched 234915243 512 bdev_strategy:start 12:53 sched 306224021 512 bdev_strategy:start 12:53 sched 234913640 12288 bdev_strategy:start 12:53 sched 234915250 1024 spa_sync:entry 12:53 sched 76456 <- spa_sync() dpool uberblock_update:entry 12:53 75007 122 76456 uberblock_update:return 12:53 85 0 spa_sync:return 12:53 sched bdev_strategy:start 12:53 sched 234915252 1536 bdev_strategy:start 12:53 sched 234915256 7168 . ... some more IO of various blocks and sizes . bdev_strategy:start 12:53 sched 379625154 1536 bdev_strategy:start 12:53 sched 379625160 4608 uberblock_update:entry 12:53 41537 159 41537 <- updating rpool. txg == blk_birth! uberblock_update:return 12:53 85 1 <- args[1]=1: sync! uberblock_update:entry 12:53 69702 178 69702 uberblock_update:return 12:53 85 1 <- args[1]=1: sync! vdev_uberblock_sync:entry 12:53 sched bdev_strategy:start 12:53 sched 396 1024 bdev_strategy:start 12:53 sched 908 1024 bdev_strategy:start 12:53 sched 390704524 1024 bdev_strategy:start 12:53 sched 390705036 1024 spa_sync:return 12:53 sched vdev_uberblock_sync:entry 12:54 sched bdev_strategy:start 12:54 sched 386 1024 <- write to next block, #386

bdev_strategy:start 12:54 sched 898 1024 bdev_strategy:start 12:54 sched 39005058 1024 bdev_strategy:start 12:54 sched 39005570 1024 spa_sync:return 12:54 sched (wrote file in dpool-2, with :w in vi) 0 6668 bdev_strategy:start 2009 Jan 9 19:13:08 sched 234913600 20480 (done writing) spa_sync:entry 13:23 sched 41538 <- spa_sync() rpool uberblock_update:entry 13:23 41537 159 41538 <- updating rpool. txg != blk_birth! uberblock_update:return 13:23 85 0 <- args[1]=0: no sync! skip writing to block #388!

spa_sync:return 13:23 sched spa_sync:entry 13:23 sched 69703 <- spa_sync() dpool-2 bdev_strategy:start 13:23 sched 234915299 512 bdev_strategy:start 13:23 sched 306224077 512 bdev_strategy:start 13:23 sched 234915300 512 bdev_strategy:start 13:23 sched 234915302 1024 spa_sync:entry 13:23 sched 76457 <- spa_sync() dpool uberblock_update:entry 13:23 75007 122 76457 uberblock_update:return 13:23 85 0 spa_sync:return 13:23 sched bdev_strategy:start 13:23 sched 234915304 1536 bdev_strategy:start 13:23 sched 234915308 7168 . ... some more IO of various blocks and sizes . bdev_strategy:start 13:23 sched 379625170 6144 bdev_strategy:start 13:23 sched 379625184 6144 uberblock_update:entry 13:23 69703 178 69703 <- updating dpool-2. txg == blk_birth! uberblock_update:return 13:23 85 1 <- args[1]=1: sync! vdev_uberblock_sync:entry 13:23 sched bdev_strategy:start 13:23 sched 398 1024 bdev_strategy:start 13:23 sched 910 1024 bdev_strategy:start 13:23 sched 390704526 1024 bdev_strategy:start 13:23 sched 390705038 1024 spa_sync:return 13:23 sched (rm file in dpool-2)

(done rm) spa_sync:entry 13:53 sched 69704 <- spa_sync() dpool-2 bdev_strategy:start 13:53 sched 234915348 512 bdev_strategy:start 13:53 sched 306224124 512 bdev_strategy:start 13:53 sched 234915364 1024 bdev_strategy:start 13:53 sched 306224140 1024 spa_sync:entry 13:53 sched 76458 <- spa_sync() dpool uberblock_update:entry 13:53 75007 122 76458 uberblock_update:return 13:53 85 0 spa_sync:return 13:53 sched spa_sync:entry 13:53 sched 41539 <- spa_sync() rpool bdev_strategy:start 13:53 sched 25571606 15360 bdev_strategy:start 13:53 sched 25571642 1024 . ... some more IO of various blocks and sizes . bdev_strategy:start 13:53 sched 379625196 6144 bdev_strategy:start 13:53 sched 379625210 6144 uberblock_update:entry 13:53 69704 178 69704 <- updating dpool-2. txg == blk_birth! uberblock_update:return 13:53 85 1 <- args[1]=1: sync! bdev_strategy:start 13:53 sched 25671818 2560 bdev_strategy:start 13:53 sched 4310632 2560 . ... some more IO of various blocks and sizes . bdev_strategy:start 13:53 sched 25671823 3584 bdev_strategy:start 13:53 sched 25682892 2048 uberblock_update:entry 13:53 41539 159 41539 <- updating rpool. txg == blk_birth! uberblock_update:return 13:53 85 1 <- args[1]=1: sync! vdev_uberblock_sync:entry 13:53 sched bdev_strategy:start 13:53 sched 400 1024 bdev_strategy:start 13:53 sched 912 1024 bdev_strategy:start 13:53 sched 390704528 1024 bdev_strategy:start 13:53 sched 390705040 1024 spa_sync:return 13:53 sched vdev_uberblock_sync:entry 13:54 sched bdev_strategy:start 13:54 sched 390 1024 <- write to block #390

bdev_strategy:start 13:54 sched 902 1024 bdev_strategy:start 13:54 sched 39005062 1024 bdev_strategy:start 13:54 sched 39005574 1024 spa_sync:return 13:54 sched spa_sync:entry 14:23 sched 41540 <- spa_sync() rpool uberblock_update:entry 14:23 41539 159 41540 <- updating rpool. txg != blk_birth! uberblock_update:return 14:23 85 0 <- args[1]=0: no sync! skip writing to block #392!

spa_sync:return 14:23 sched spa_sync:entry 14:23 sched 69705 <- spa_sync() dpool-2 uberblock_update:entry 14:23 69704 178 69705 <- updating dpool-2. txg != blk_birth! uberblock_update:return 14:23 85 0 <- args[1]=0: no sync! spa_sync:return 14:23 sched spa_sync:entry 14:23 sched 76459 <- spa_sync() dpool uberblock_update:entry 14:23 75007 122 76459 <- updating dpool. txg != blk_birth! uberblock_update:return 14:23 85 0 <- args[1]=0: no sync! spa_sync:return 14:23 sched spa_sync:entry 14:53 sched 76460 <- spa_sync() dpool uberblock_update:entry 14:53 75007 122 76460 <- updating dpool. txg != blk_birth! uberblock_update:return 14:53 85 0 <- args[1]=0: no sync! spa_sync:return 14:53 sched spa_sync:entry 14:53 sched 69706 <- spa_sync() dpool-2 uberblock_update:entry 14:53 69704 178 69706 <- updating dpool-2. txg != blk_birth! uberblock_update:return 14:53 85 0 <- args[1]=0: no sync! spa_sync:return 14:53 sched spa_sync:entry 14:53 sched 41541 <- spa_sync() rpool uberblock_update:entry 14:53 41539 159 41541 <- updating rpool. txg != blk_birth! uberblock_update:return 14:53 85 0 <- args[1]=0: no sync! skip writing to block #394!

spa_sync:return 14:53 sched spa_sync:entry 15:23 sched 41542 <- spa_sync() rpool uberblock_update:entry 15:23 41539 159 41542 <- updating rpool. txg != blk_birth! uberblock_update:return 15:23 85 0 <- args[1]=0: no sync! skip writing to block #396!

spa_sync:return 15:23 sched spa_sync:entry 15:23 sched 69707 <- spa_sync() dpool-2 uberblock_update:entry 15:23 69704 178 69707 <- updating dpool-2. txg != blk_birth! uberblock_update:return 15:23 85 0 <- args[1]=0: no sync! spa_sync:return 15:23 sched spa_sync:entry 15:23 sched 76461 <- spa_sync() dpool uberblock_update:entry 15:23 75007 122 76461 <- updating dpool. txg != blk_birth! uberblock_update:return 15:23 85 0 <- args[1]=0: no sync! spa_sync:return 15:23 sched (created new file in rpool, using the touch command)

(done creating file)

spa_sync:entry 15:53 sched 76462 <- spa_sync() dpool uberblock_update:entry 15:53 75007 122 76462 <- updating dpool. txg != blk_birth! uberblock_update:return 15:53 85 0 <- args[1]=0: no sync! spa_sync:return 15:53 sched spa_sync:entry 15:53 sched 69708 <- spa_sync() dpool-2 uberblock_update:entry 15:53 69704 178 69708 <- updating dpool-2. txg != blk_birth! uberblock_update:return 15:53 85 0 <- args[1]=0: no sync! spa_sync:return 15:53 sched spa_sync:entry 15:53 sched 41543 <- spa_sync() rpool bdev_strategy:start 15:53 sched 25571658 15360 bdev_strategy:start 15:53 sched 25571688 1024 . ... some more IO of various blocks and sizes . bdev_strategy:start 15:53 sched 25671855 4096 bdev_strategy:start 15:53 sched 25682908 2048 uberblock_update:entry 15:53 41543 159 41543 <- updating rpool. txg == blk_birth! uberblock_update:return 15:53 85 1 <- args[1]=1: sync! vdev_uberblock_sync:entry 15:53 sched bdev_strategy:start 15:53 sched 398 1024 <- write to block #398! bdev_strategy:start 15:53 sched 910 1024 bdev_strategy:start 15:53 sched 39005070 1024 bdev_strategy:start 15:53 sched 39005582 1024 spa_sync:return 15:54 sched

I think this is a very nice example of what you can find out with DTrace. You may ask, "Why did the rpool graph at the top of this blog entry show an update in rpool 2 times per minute whereas the DTrace output shows an update not that often (which, by the way, would further extend the life of the USB flash drive…)?" This is because the output of the zdb script was written to file in /var/tmp every minute, causing a change in rpool more often than during the run time of the DTrace script!